From Chatbots to Autonomous Problem-Solvers: The Rise of Agentic AI

If you’ve been working with AI for the past couple of years, you’ve probably noticed something. We’ve moved beyond the initial excitement of generative AI—those impressive chatbots that could write essays or answer questions based on a single prompt. The technology was remarkable, but it was fundamentally reactive. You asked, it answered. Simple as that.

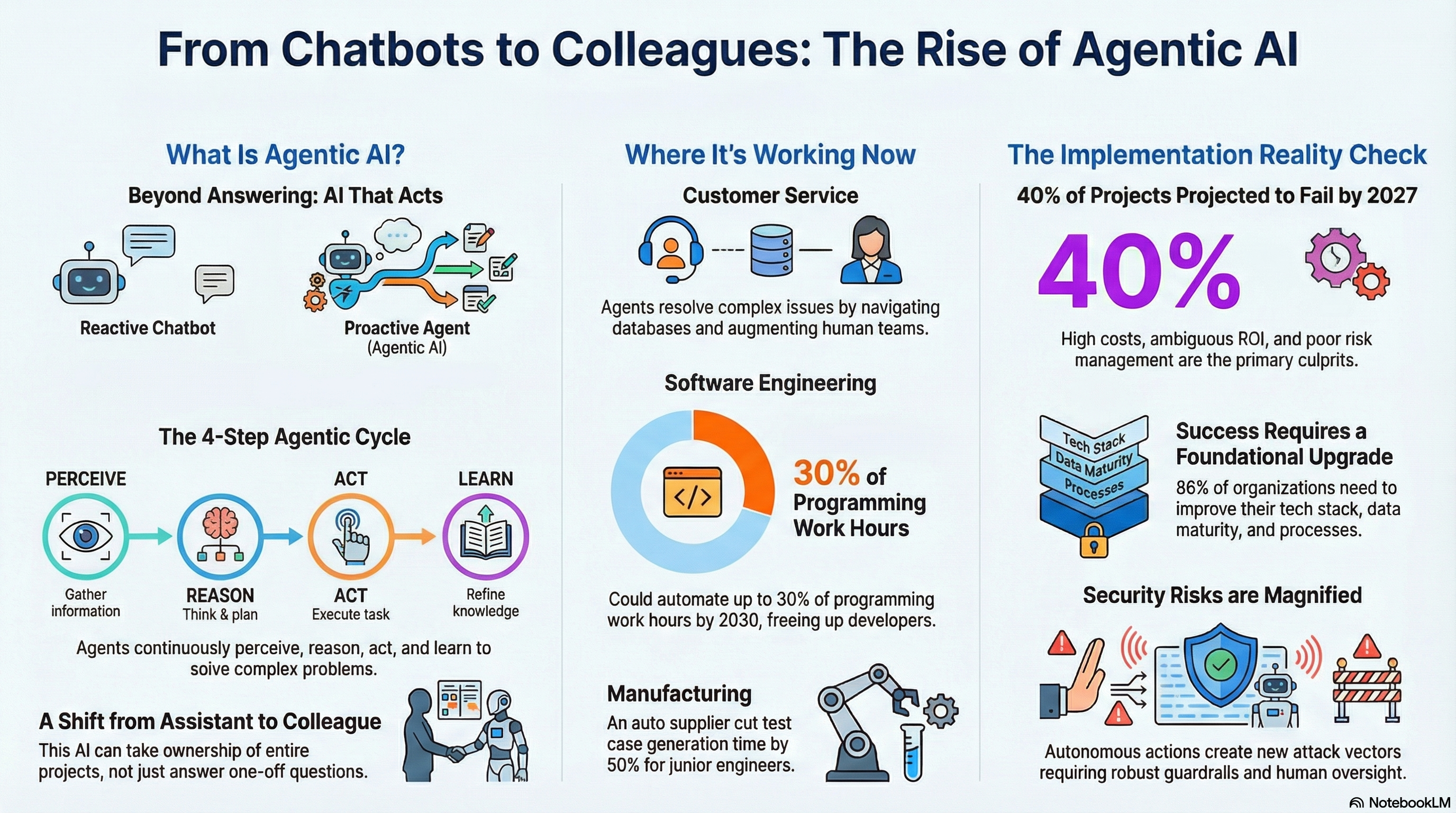

Now we’re entering a different phase entirely. Agentic AI represents a shift from tools that respond to tools that actually think ahead, plan multiple steps, and execute complex workflows with minimal oversight. It’s the difference between having a helpful assistant who answers your questions and having a colleague who can take ownership of entire projects.

What Makes Agentic AI Different

The distinction matters more than you might initially think. Traditional generative AI operates in a one-shot model: you provide a prompt, the model generates a response, and that’s the end of the interaction. Agentic AI, by contrast, engages in a continuous cycle of perception, reasoning, action, and learning.

Here’s how the four-step process actually works in practice. First, the agent perceives its environment by gathering and processing data from various sources—databases, APIs, sensors, whatever’s relevant to the task. Then it reasons through the problem using large language models as orchestration engines, breaking down complex goals into actionable steps. The action phase is where things get interesting: agents don’t just suggest solutions, they execute them by interfacing with external tools and software systems. Finally, they learn from each interaction, creating what’s often called a “data flywheel” where outputs feed back into the system to improve future performance.

This architecture enables something we haven’t really seen before at scale: AI systems that can handle multi-step problems autonomously. A customer service agent, for instance, doesn’t just answer a question about an account balance. It can check the balance, analyze which payment methods are available, recommend specific options based on the customer’s history, and then complete the transaction when the customer gives the go-ahead.

The Technical Reality Behind the Hype

Let’s be honest about what’s actually happening under the hood. Agentic AI leverages large language models as reasoning engines, but the real innovation lies in how these systems coordinate multiple specialized models for specific functions. Retrieval-augmented generation plays a crucial role here, allowing agents to access proprietary data sources and deliver contextually accurate outputs without hallucinating.

The challenge—and this is where a lot of early implementations are stumbling—is creating robust orchestration mechanisms. Modern frameworks like LangChain, AutoGen, and CrewAI represent a fundamental departure from symbolic AI traditions. They’re not implementing classical perception-planning-action-reasoning loops. Instead, they’re orchestrating generative pipelines where pre-trained models act as central executives coordinating tasks through stochastic generation and prompt-driven orchestration.

What this means practically is that you can’t just bolt agentic AI onto your existing infrastructure and expect magic to happen. Companies learning this the hard way have discovered that nearly 40% of agentic AI projects are projected to fail by 2027, according to Gartner. The culprits? High implementation costs, ambiguous ROI, and inadequate risk management for autonomous systems.

Real-World Applications Starting to Deliver

Despite the challenges, we’re seeing genuine traction in specific domains where the value proposition is clear and the constraints are well-defined.

In customer service, companies are moving beyond simple chatbot interactions to agents that actually resolve complex issues. These systems can navigate multiple databases, apply business logic, and escalate appropriately when human judgment is required. The key insight here is that successful implementations don’t try to replace human agents entirely—they augment them by handling routine work while escalating nuanced situations.

Software engineering is another area where agentic AI is making inroads. Developers are using autonomous coding agents not to replace engineers but to automate the repetitive parts of the job. By some estimates, AI could automate up to 30% of programming work hours by 2030, freeing developers to focus on architectural decisions and complex problem-solving rather than boilerplate code and routine debugging.

Healthcare applications are particularly fascinating because they require such careful handling of risk and compliance. AI agents are being deployed to help doctors analyze vast amounts of medical literature and patient data, distilling critical information for better-informed care decisions. The Epic demo of an AI health professional that could assess post-surgical recovery through video examination shows where things are heading, though it’ll be years before such systems are widely available given the regulatory hurdles.

Manufacturing and industrial settings are seeing perhaps the most measurable impact. An automotive supplier recently implemented an agentic system for generating test case descriptions across hundreds of complex requirements. The result? A 50% reduction in time for certain requirement types, particularly benefiting junior engineers who previously spent hours on these tasks. The supplier chose to build a custom system rather than use off-the-shelf solutions, and they had it deployed within weeks.

The Implementation Reality Check

Here’s what the successful implementations have in common: they started small, scaled deliberately, and invested heavily in change management. The companies that struggled tried to treat agentic AI as a plug-and-play solution.

One survey found that 86% of organizations need to upgrade their existing technology stack—and reevaluate their structures and processes—before they can effectively deploy AI agents. This isn’t just about having the latest GPUs or cloud infrastructure. It’s about data maturity, API accessibility, and governance frameworks.

The data challenge deserves particular attention. Agentic AI systems need access to clean, structured, and current data across multiple sources. Many enterprises still have siloed data, missing metadata, or outdated records. Without unified data pipelines and robust governance, agents will hallucinate, misfire, or require constant human intervention—defeating the entire purpose.

Security concerns compound these issues. When you’re giving AI systems the ability to take autonomous actions across your infrastructure, you’re opening up new attack vectors. The infamous case of cybercriminals using AI deepfakes to authorize $25.6 million in fraudulent wire transfers should give any CTO pause. Successful implementations involve extensive adversarial testing, clear guardrails on autonomous actions, and human-in-the-loop oversight for critical decisions.

The Architectural Challenge

Building effective agentic systems requires what some are calling an “AI mesh”—a distributed architecture built on principles of composability, distributed intelligence, layered decoupling, and vendor neutrality. The goal is to create systems where tools, models, and agents can be added without rebuilding the core infrastructure.

This approach stands in stark contrast to the monolithic deployments many organizations attempted with their first wave of AI projects. The mesh architecture enables agents to coordinate and divide tasks across networks while maintaining modularity and avoiding vendor lock-in.

But here’s the thing that often gets overlooked: you also need to shift from use-case-specific data pipelines to reusable data products. Organizations that succeed with agentic AI typically implement data streaming platforms that can collect, process, and transmit data in real-time from multiple sources. Apache Kafka and similar technologies become critical infrastructure, not nice-to-have additions.

What’s Actually Working

The most successful agentic AI implementations share a few characteristics. They focus on specific business processes with clear boundaries and measurable outcomes. They invest as much in organizational change management as in technology. They treat agents as specialized team members with specific strengths and limitations, not as all-purpose problem-solvers.

Companies like UPS demonstrate this approach well. Their ORION routing system uses AI agents to optimize delivery routes, but the real secret to their $300 million in annual cost savings wasn’t just the algorithm—it was training drivers to work with the system and incorporating their feedback into continuous improvements.

The lesson here is that agentic AI succeeds when it’s embedded in well-designed workflows with appropriate human oversight. The technology enables new capabilities, but it doesn’t eliminate the need for domain expertise, judgment, or coordination.

Looking Ahead

By 2028, Gartner predicts that 15% of day-to-day work decisions will be made autonomously by agentic AI, up from essentially zero today. That’s a remarkable trajectory, but it’s worth noting what it doesn’t say: 85% of decisions will still require human judgment.

The future isn’t about AI agents replacing humans. It’s about AI agents handling the structured, repeatable parts of complex workflows while humans focus on the parts that require creativity, empathy, ethical judgment, and strategic thinking. The most effective organizations will be those that figure out how to orchestrate this collaboration—not those that simply deploy the most autonomous agents.

For developers and technical leaders, the opportunity is clear but the path is demanding. You need to start building competency now, even if you’re not ready for full-scale deployment. That means experimenting with agentic frameworks, understanding their limitations, and developing expertise in prompt engineering, model evaluation, and governance.

The companies that will succeed in the age of agentic AI are those that approach it not as a technology deployment but as a fundamental rethinking of how work gets done. That requires asking harder questions than “How do we add AI?” You need to ask: How should decisions be made? How should work flow? How should humans and autonomous systems collaborate effectively?

Those questions don’t have easy answers, but they’re the right ones to be asking.